
DeepSeek releases upgrade to R1 model with enhanced reasoning capabilities

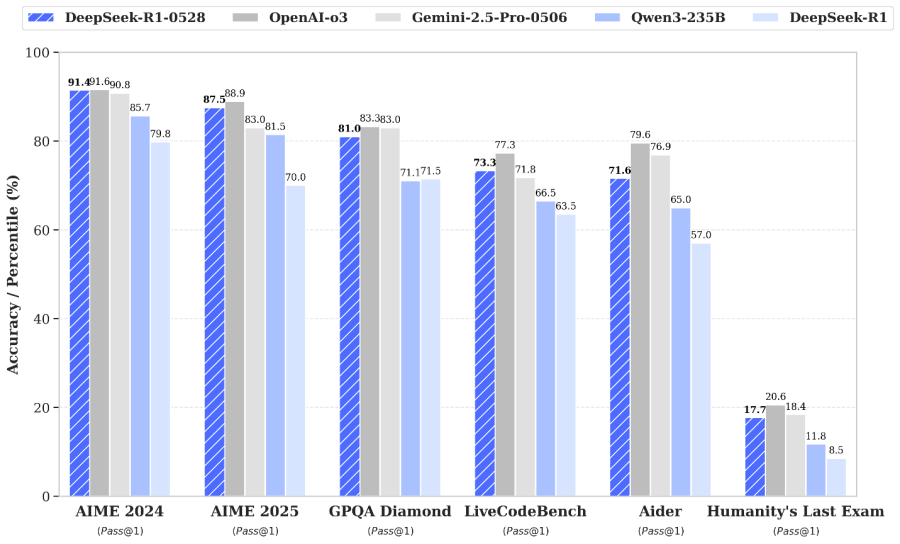

DeepSeek has announced the release of DeepSeek-R1-0528, an upgrade to its reasoning-focused language model with improvements in mathematical problem-solving, programming, and logical reasoning. According to the company, the gains come from increased computational resources and algorithmic optimizations applied during post-training, which let the model reason more deeply about complex problems.

[Photo/DeepSeek]

The largest improvement is in mathematical reasoning. On the AIME 2025 test, accuracy rose from 70% in the previous version to 87.5%. DeepSeek attributes this to greater reasoning depth: average token usage per AIME question grew from 12,000 to 23,000 tokens, meaning the model now spends nearly twice as many tokens working through each problem.

The upgraded model also improves beyond mathematics. In programming, DeepSeek-R1-0528 achieved a 73.3% pass rate on LiveCodeBench, up from 63.5% for the previous version, and its Codeforces rating rose from 1530 to 1930.
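To put the reported figures side by side, the short sketch below computes the absolute and relative change for each benchmark quoted above. The numbers are taken directly from the article; the dictionary layout and the `change` helper are illustrative choices, not anything published by DeepSeek.

```python
# Figures as reported in the article: (previous DeepSeek-R1, DeepSeek-R1-0528).
REPORTED = {
    "AIME 2025 accuracy (%)": (70.0, 87.5),
    "Avg. tokens per AIME question": (12_000, 23_000),
    "LiveCodeBench pass rate (%)": (63.5, 73.3),
    "Codeforces rating": (1530, 1930),
}


def change(before: float, after: float) -> tuple[float, float]:
    """Return (absolute change, relative change in percent of the old value)."""
    delta = after - before
    return delta, 100.0 * delta / before


if __name__ == "__main__":
    for name, (before, after) in REPORTED.items():
        delta, rel = change(before, after)
        # :g trims float noise such as 9.799999999999997 down to 9.8
        print(f"{name}: {before:g} -> {after:g} ({delta:+g}, {rel:+.1f}%)")
```

Note that the accuracy and pass-rate rows are changes in percentage points, so their "relative" column is a relative improvement over the old score, not a second percentage-point figure.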

Alongside the main model, DeepSeek released DeepSeek-R1-0528-Qwen3-8B, a distilled version that transfers these reasoning capabilities to a smaller 8-billion-parameter model. This compact version reaches 86.0% accuracy on AIME 2024, matching the much larger Qwen3-235B-thinking while using significantly fewer computational resources.

DeepSeek also reports lower hallucination rates and improved function calling in this upgrade, which should make the model more reliable in real-world applications.

The model remains available through DeepSeek's chat interface via the "DeepThink" toggle, as well as through API access on DeepSeek's platform.
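As a rough illustration of API access, the sketch below builds (but does not send) a chat completion request using only the Python standard library. It assumes an OpenAI-compatible endpoint at `https://api.deepseek.com/chat/completions`, the model name `deepseek-reasoner`, and a `reasoning_content` field in the response message. These values come from DeepSeek's public API documentation rather than from this article, so check them against the current docs before relying on them. The API key shown in usage is a placeholder.

```python
import json
import urllib.request

# Assumed values based on DeepSeek's public API docs; verify before use.
API_URL = "https://api.deepseek.com/chat/completions"
MODEL = "deepseek-reasoner"


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion POST request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def parse_response(body: bytes) -> tuple[str, str]:
    """Return (reasoning, answer) from a JSON response body.

    Assumes the reasoning trace is returned in a `reasoning_content` field
    next to the usual `content` field of the first choice's message.
    """
    message = json.loads(body)["choices"][0]["message"]
    return message.get("reasoning_content", ""), message["content"]
```

To actually send the request, one would pass it to `urllib.request.urlopen`, for example `with urllib.request.urlopen(build_request("What is 2 + 2?", "YOUR_API_KEY")) as resp: reasoning, answer = parse_response(resp.read())`. Keeping request construction separate from the network call makes the payload easy to inspect and test offline.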

This release positions DeepSeek-R1-0528 as a competitive option among reasoning models, with performance approaching that of leading models such as OpenAI's o3 and Google's Gemini 2.5 Pro.

Unlike these proprietary alternatives, DeepSeek-R1 remains open-source under the MIT license, offering transparency and flexibility for research and commercial applications.

In particular, the successful distillation into smaller models opens new possibilities for researchers and organizations that need strong reasoning capabilities without the computational overhead of larger models.

The World Internet Conference (WIC) was established as an international organization on July 12, 2022, headquartered in Beijing, China. It was jointly initiated by Global System for Mobile Communication Association (GSMA), National Computer Network Emergency Response Technical Team/Coordination Center of China (CNCERT), China Internet Network Information Center (CNNIC), Alibaba Group, Tencent, and Zhijiang Lab.